Random Forest Model Example

Bagging (bootstrap aggregating) regression trees is a technique that can turn a single tree model with high variance and poor predictive power into a more accurate prediction function. Bagged trees, however, tend to be correlated. For example, if we create six decision trees with different bootstrapped samples of the Boston housing data, we see that the tops of the trees all have a very similar structure. Random forest is an ensemble tool that takes a subset of observations and a subset of variables to build each decision tree, which makes the trees less alike. We also included a demo, where we built a random forest model to predict wine quality.
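The idea of giving each tree its own subset of observations and variables can be sketched with Python's standard `random` module. This is a minimal illustration, not a full implementation: `draw_tree_inputs` is a hypothetical helper, and the sizes (10 rows, 6 features, 2 features per tree) are arbitrary. It draws the feature subset once per tree, matching the description above; Breiman's random forest, and scikit-learn's implementation, redraw the subset at every split instead.

```python
import random


def draw_tree_inputs(n_rows, n_features, n_sub_features, rng):
    """Draw the training inputs for one tree (hypothetical helper).

    rows:     a bootstrap sample of row indices, drawn with replacement,
              so some rows repeat and others are left out.
    features: a random subset of column indices, drawn without replacement.
    """
    rows = [rng.randrange(n_rows) for _ in range(n_rows)]
    features = sorted(rng.sample(range(n_features), n_sub_features))
    return rows, features


rng = random.Random(42)  # fixed seed so the sketch is reproducible
for tree_id in range(3):
    rows, features = draw_tree_inputs(n_rows=10, n_features=6, n_sub_features=2, rng=rng)
    print(f"tree {tree_id}: rows={sorted(rows)} features={features}")
```

Because each tree sees different rows and columns, the trees make different mistakes, and averaging or voting over them cancels part of that error.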

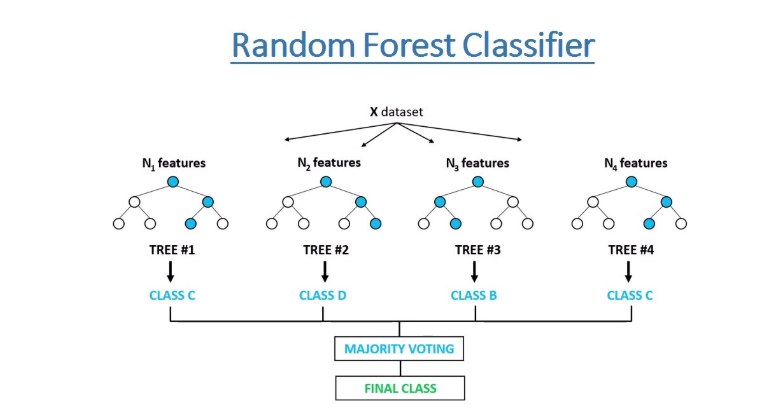

I explain how the algorithm works and the ideas behind it. Random forests have a variety of applications, such as recommendation engines, image classification and feature selection. Feature randomness: in a normal decision tree, each split considers every feature, whereas in a random forest each split considers only a random subset of the features. To keep things simple, we will examine just two of the forest's trees in this example. Random forest prediction for a classification problem:

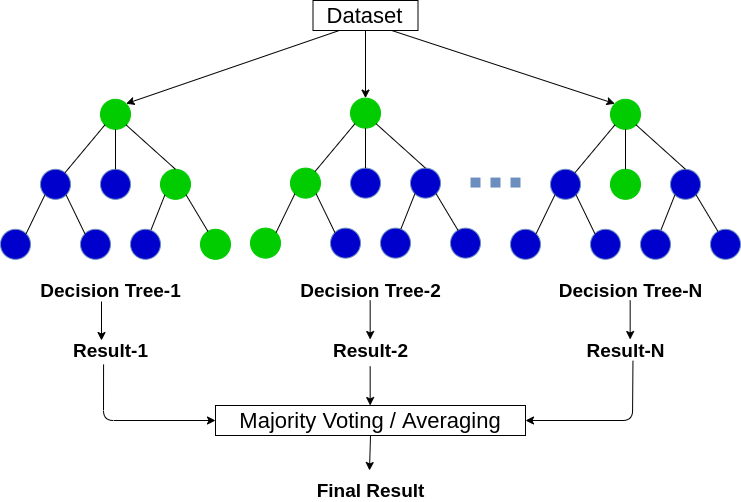

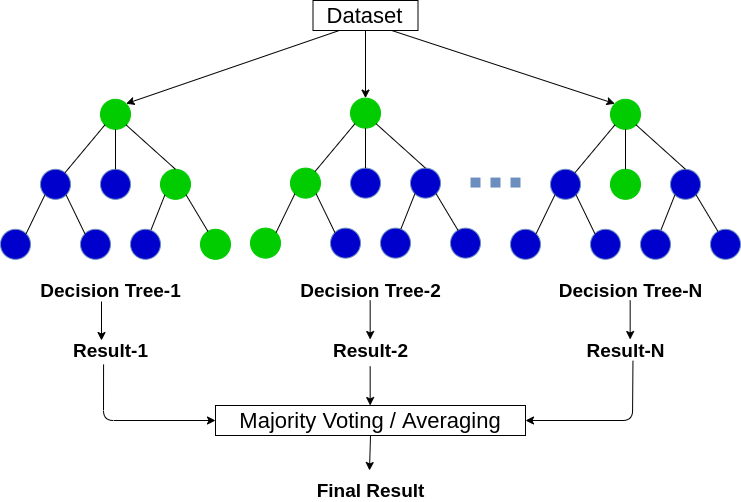
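A minimal sketch of random forest prediction for a classification problem, assuming scikit-learn and its bundled Iris dataset (the figures above do not say which data they use). It fits a forest, scores it on held-out rows, and predicts a single item.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Iris is a stand-in dataset chosen for illustration.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # mean accuracy on the held-out rows
print(f"test accuracy: {accuracy:.3f}")

# Predict a single item: scikit-learn expects a 2-D array, so keep one row as a slice.
single = X_test[:1]
print("predicted class:", clf.predict(single)[0])
print("class probabilities:", clf.predict_proba(single)[0])
```

Slicing with `X_test[:1]` rather than `X_test[0]` keeps the input two-dimensional, which is the shape `predict` expects.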

Random forest is well suited to such problems. You can also make a prediction for a single item. If you want a good summary of the theory and uses of random forests, I suggest you check out their guide.

For classification, the forest's prediction is the majority vote of the classes predicted by its $B$ trees:

$$F(x) = \text{majority vote}\,\{\hat{C}_b(x)\}_{b=1}^{B}$$

where $\hat{C}_b(x)$ is the class predicted by the $b$-th tree.

Another example that I am sure all of us have encountered is a job interview: each interviewer's judgment alone is a weak signal, but the panel's combined decision is more reliable. Random forest works on the same principle, with decision trees as the weak learners. The original dataset is split into …. The algorithm then builds multiple such decision trees and combines them to get a more accurate and stable prediction. It is worth knowing how this works in machine learning, as well as its applications. Random forests are based on a simple idea: many weak learners, combined, make a stronger predictor than any one of them. One can use XGBoost to train a standalone random forest or use a random forest as a base model for gradient boosting. An example of random forest classification:
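As a small example of the classification step, the majority-vote rule described above can be written in a few lines of Python. The per-tree predictions here are made up for illustration. Note that scikit-learn's `RandomForestClassifier` does not count hard votes like this: it averages the trees' predicted class probabilities and picks the most probable class.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the class predicted by the most trees (the mode).

    Ties go to the class that appears first in the list, because
    Counter.most_common orders equal counts by first occurrence.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


# Hypothetical per-tree predictions for one input x from B = 5 trees.
votes = ["spam", "ham", "spam", "spam", "ham"]
print(majority_vote(votes))  # spam
```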

We worked in RStudio for this demo, where we went over different commands, packages, and data visualization methods in R. When we check out random forest tree 1, we find that it can only consider a random subset of the features, such as feature 2. The random forest algorithm (RFA) is a learning method that operates by constructing many decision trees. A random forest regressor works with data that has a numeric or continuous output, which cannot be defined by classes.
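The demo itself used R; the following is a Python sketch of a random forest regressor, assuming scikit-learn and synthetic data from `make_regression` (not the demo's data). It also checks that, for regression, the forest's prediction is the average of its trees' outputs rather than a vote.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Synthetic data with a continuous target (an assumption for illustration).
X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

reg = RandomForestRegressor(n_estimators=200, random_state=0)
reg.fit(X_train, y_train)
r2 = reg.score(X_test, y_test)  # coefficient of determination on held-out rows
print(f"test R^2: {r2:.3f}")

# For regression the forest averages the trees' outputs instead of voting.
x_one = X_test[:1]
per_tree = np.array([tree.predict(x_one)[0] for tree in reg.estimators_])
# The two numbers agree up to floating-point rounding.
print(per_tree.mean(), reg.predict(x_one)[0])
```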

Random forest classifiers are extremely valuable for making accurate predictions, for example about what a specific customer will do. The random forest classifier works on the principle that many weak estimators, when combined, form a strong estimator. This article provides steps for applying random forest to classification and prediction. A trained random forest model can also be saved and reloaded later. Because each tree is trained on a different bootstrap sample and a different random subset of features, the trees are diverse, and this diversity generally results in a better model. A key parameter is the number of candidate features drawn at each split. One example of a machine learning algorithm is the random forest algorithm (Breiman, L.). Random forests, or random decision forests, are an ensemble learning method for classification, regression and other tasks that operates by constructing a multitude of decision trees at training time and outputting the class that is the mode of the classes (classification) or the mean prediction of the individual trees (regression).
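Saving a trained model can be sketched with Python's built-in `pickle` module, assuming a scikit-learn forest; the file name is only an example, and a temporary directory keeps the sketch from leaving files behind. The scikit-learn documentation also describes `joblib.dump`/`joblib.load`, warns against unpickling files from untrusted sources, and recommends loading with the same scikit-learn version that saved the model. In R, `saveRDS()` and `readRDS()` play the same role.

```python
import os
import pickle
import tempfile

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
model = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "random_forest.pkl")  # example file name
    # Save the fitted forest, including all of its trees, to disk.
    with open(path, "wb") as f:
        pickle.dump(model, f)
    # Later (for example in another process), load it back and predict with it.
    with open(path, "rb") as f:
        restored = pickle.load(f)

print((restored.predict(X) == model.predict(X)).all())  # True
```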

Learn about random forests and build your own model in Python, for both classification and regression. Random forest combines the output of multiple decision trees. We will first create a random forest model with default parameters and then fine-tune it.
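The two steps just described, a baseline with default parameters followed by fine-tuning, can be sketched with scikit-learn's `GridSearchCV`. This assumes scikit-learn's bundled wine dataset, which classifies wine cultivars and is not the wine-quality data from the demo; the parameter grid is a small illustrative choice, not a recommended one.

```python
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Bundled wine dataset (cultivar labels), used here as a stand-in.
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, stratify=y)

# Step 1: baseline model with default parameters.
baseline = RandomForestClassifier(random_state=0).fit(X_train, y_train)
print(f"default accuracy: {baseline.score(X_test, y_test):.3f}")

# Step 2: fine-tune a few key parameters with 5-fold cross-validation.
param_grid = {
    "n_estimators": [50, 150],
    "max_features": ["sqrt", 0.5],  # features considered at each split
    "min_samples_leaf": [1, 3],
}
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)  # refits the best setting on all training rows
print("best parameters:", search.best_params_)
print(f"tuned accuracy: {search.score(X_test, y_test):.3f}")
```

Tuning on the training split only, and scoring once on the test split, keeps the final accuracy estimate honest.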

Instead of searching for the most important feature while splitting a node, a random forest searches for the best feature among a random subset of features. Random forest builds an ensemble of classifiers, each of which is a tree model constructed using bootstrapped samples from the input data. Random forests are also good at handling large datasets with high dimensionality and heterogeneous feature types (for example, when one column is categorical and another is numerical). A related variant performs classification and regression with a forest of trees built from random inputs, using conditional inference trees as base learners.
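The first idea above, searching for the best split among a random subset of features, can be sketched in plain Python with Gini impurity as the split criterion. This is a simplified illustration of a single node, not a full tree: `gini` and `best_split` are hypothetical helpers and the toy data is made up. In scikit-learn the subset size is the `max_features` parameter; in R's randomForest package it is `mtry`.

```python
import random


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(X, y, max_features, rng):
    """Find the best (feature, threshold) split, searching only a random
    subset of max_features features, as a random forest does at each node."""
    candidates = rng.sample(range(len(X[0])), max_features)
    best = None  # (weighted impurity, feature index, threshold)
    for f in candidates:
        for threshold in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= threshold]
            right = [label for row, label in zip(X, y) if row[f] > threshold]
            if not left or not right:
                continue  # skip splits that leave one side empty
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return candidates, best


# Toy data: feature 0 separates the classes perfectly; features 1 and 2 are noise.
X = [[1, 5, 3], [2, 1, 8], [3, 4, 1], [7, 2, 6], [8, 5, 2], [9, 3, 7]]
y = ["a", "a", "a", "b", "b", "b"]
rng = random.Random(1)
for node in range(3):
    candidates, best = best_split(X, y, max_features=2, rng=rng)
    print(f"node {node}: candidates={sorted(candidates)} best={best}")
```

When feature 0 is not among the candidates, the node must settle for a worse split; that forced variety is what decorrelates the trees.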

Check your random forest model's feature_importances_ attribute, then retrain your model on 3 or 4 years of data after dropping unimportant features.
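A minimal sketch of that workflow, assuming scikit-learn and synthetic data in which only the first 4 of 10 features carry signal; the 0.05 cutoff is a hypothetical choice, not a rule. Impurity-based importances can favor high-cardinality features, so `sklearn.inspection.permutation_importance` is a common cross-check.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data: 4 informative features (columns 0-3) plus 6 noise features.
X, y = make_classification(n_samples=600, n_features=10, n_informative=4,
                           n_redundant=0, shuffle=False, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

# Impurity-based importances: one non-negative value per feature, summing to 1.
importances = model.feature_importances_
for i in np.argsort(importances)[::-1]:
    print(f"feature {i}: {importances[i]:.3f}")

# Keep features above the hypothetical cutoff and retrain on the reduced set.
keep = np.flatnonzero(importances >= 0.05)
reduced = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train[:, keep], y_train)
print("kept features:", keep.tolist())
print(f"full model accuracy:    {model.score(X_test, y_test):.3f}")
print(f"reduced model accuracy: {reduced.score(X_test[:, keep], y_test):.3f}")
```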


Source: Random Forest Model Example